Over the last week or so, a team of us in the office have been testing and bug fixing the Version 1.0 release of the Biological Data Recording System (BDRS), software we are developing on behalf of the Atlas of Living Australia. To me, this has felt like an epic sprint event.

First off – why? Well, for the last two years, we’ve been working from our local Subversion repository for the BDRS, and then updating the Google Code repository as we go. To date, the implementations of the BDRS have been made up of incremental builds that have been pushed up to Google Code, but have not really gone through a rigorous release cycle. However, as this software is now starting to reach a level of maturity (i.e. it’s not changing as dramatically), we are moving towards a more rigorous release cycle, pushing versioned software up to the Google Code repository, as well as continuing to publish the latest features as we build them.

This also reflects the efforts we’re putting into building a community around the BDRS – we will be just one of many developers (hopefully!) working on this software, directly from the Google Code repository.

Benny and AJ (pre-leave) had a big whiteboarding session and then set up a workflow document in Google Docs. This is a useful way to use Docs – when there is collaboration required, then that’s when we roll out a Google Doc (although, because we can’t get more than an ADSL2 line to this office, when our Internet drops, that causes problems). The workflows were left really quite open (e.g. “Test the My Sightings page”), so as to not corral the testing, and in practice because we just didn’t have the time to elaborate on them! As with any commercial contract, we have limited resources, so the plan was to test the system from end to end, and then to report any bugs, clarifications or style issues in our Mantis bug tracking system.

This approach does have downsides – we didn’t have a documented set of test cases to exercise every feature in the BDRS. Given that the BDRS has a large amount of complexity and functionality in it, we were always going to struggle to get this level of documentation and planning done for this test phase, but we wanted to make a start documenting our tests as part of this release. I know that this will upset some developers to hear how we did this – but frankly, with limited resources and a deadline, we had to take a pragmatic approach to these things, and find ways in which our process can help build the necessary documentation over time. As an example, all of our Mantis issues have steps to reproduce the bugs – these will be extracted and compiled into the start of a test script and test plan for future releases, and we’ll likely incorporate Selenium as well to help automate those.

Anyway, with this approach agreed on, AJ assembled the team before he headed off on leave. It included:

- Benny – as the project manager

- Steph – as a ‘fixer’

- Aaron – as a ‘fixer’

- Debbie – as a ‘tester’

- Me – as a ‘tester’

Each of us has a really different way of dealing with our roles, but what I found most useful was the way the whole process worked out.

Benny paired us up as tester and fixer (Debbie & Aaron, Piers & Steph) and we worked off local builds of the BDRS on the fixer’s desktop machine. That way we could get much faster turnaround of bugs, and see changes much quicker. It also meant that we had two different deployments (three, actually, as Benny also had one), and we used this to also test deployment and configuration issues.

Debbie and I started testing, and we tested it hard. Debbie was unfamiliar with the system at the start of this process, and I was much more familiar. So these two viewpoints really complemented each other.

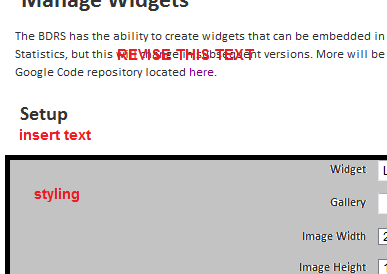

After we recorded the bugs in Mantis (including the steps to reproduce them, to make resolution easier, and a range of screenshots with our mockups in Paint attached), Benny would triage each of them, before assigning them to Aaron and Steph. As Benny has a good knowledge across most of the functions in the BDRS, he was ideally placed to run with this role. So, Benny assigned each bug a severity:

- Block – these are the bad ones, where they cause major issues in the software and have to be fixed for v1.0

- Major – these need to be fixed before the v1.0 release – but don’t corrupt or crash the system

- Minor – these are optional ones – things like content changes, styling, etc that don’t really affect core functionality

- Trivial – if you’re lucky we’ll get these done, but they’re not the focus (these are really minor changes)
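To make the severity levels a bit more concrete, here is a minimal sketch in Python of how they could be modelled. This is purely illustrative and is not how Mantis or the BDRS stores them: the `Severity` enum, the `must_fix_for_release` and `triage_order` helpers, and the sample bug summaries are all hypothetical names made up for this example.

```python
from enum import IntEnum


class Severity(IntEnum):
    """The four severity levels above, ordered from least to most urgent."""
    TRIVIAL = 1
    MINOR = 2
    MAJOR = 3
    BLOCK = 4


def must_fix_for_release(severity: Severity) -> bool:
    """Block and Major bugs have to be fixed before v1.0; the rest are optional."""
    return severity >= Severity.MAJOR


def triage_order(bugs):
    """Sort (summary, severity) pairs so the most severe bugs come first."""
    return sorted(bugs, key=lambda bug: bug[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical bug summaries, purely for illustration.
    bugs = [
        ("Typo on the help page", Severity.TRIVIAL),
        ("Saving a record crashes", Severity.BLOCK),
        ("Button colour is off", Severity.MINOR),
        ("Search returns wrong results", Severity.MAJOR),
    ]
    for summary, severity in triage_order(bugs):
        flag = "must fix" if must_fix_for_release(severity) else "optional"
        print(f"{severity.name:<8} {flag:<9} {summary}")
```

Using an ordered enum means "anything Major or above blocks the release" is a single comparison, which is roughly the call Benny was making by hand during triage.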

Once these bugs were assigned a severity, Benny then assigned them to either Steph or Aaron, depending on the tester that found them (and their knowledge of the BDRS). As a result, I’ve become the bane of Steph’s existence, because with my obsessive nature, I found a lot of bugs. This is also due to my familiarity with the system – I think I know what the system should be doing, so I reported things that didn’t gel with that familiarity and recollection (although I’ve been wrong and the team brought me up to speed with decisions that had been made that I didn’t know about). Anyway, Benny had to also balance my output against what could be achieved, and balance out these assignments across Steph and Aaron.

Steph and Aaron then got stuck into fixing the bugs, which involved several steps to ensure a quality outcome. They’d fix the issue on their local build, then reassign it back to the testers to check it worked as designed. We’d check it, then if it was OK, we’d accept it. Then the code would get a review (with Benny) to provide a second level of review before it was committed into the codebase. Then once it hit the codebase, various systems like Jenkins, Findbugs and Sonar got started on the automated testing and static analysis.

As a result, the bugs went through a variety of Status entries in Mantis, reflecting how they were progressing through this system:

- Assigned – when lodged by the testers, they were immediately assigned to Benny to triage. Once triaging (i.e. setting the Severity as above) was done, Benny would assign to a fixer.

- Acknowledged – when a fixer started working on an issue, they marked it as acknowledged, so at a glance, Benny could see who was working on what.

- Feedback – when assigned back to the tester to verify, the issues were marked as requiring feedback. If they passed, they went to the next category, Resolved; otherwise, they were reassigned back to the fixer.

- Resolved – once the tester was happy that the issue was fixed, it was marked as Resolved and then assigned back to the fixer. The fixer then undertook a code review with Benny to knock these issues off, and then commit them back to the code base.

- Closed – once committed, the issue was set to Closed.
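The status flow above is essentially a small state machine, and a rough sketch of it in Python might look like the following. This is not the Mantis API or its configuration; the `TRANSITIONS` table and the `advance` and `replay` helpers are hypothetical names for illustration. One assumption to flag: the post only says a failed fix was "reassigned back to the fixer", so the sketch guesses that such an issue goes back to the Assigned status.

```python
# Allowed moves between statuses, following the list above.
TRANSITIONS = {
    "assigned": {"acknowledged"},          # fixer picks the issue up
    "acknowledged": {"feedback"},          # fix is handed back to the tester
    "feedback": {"resolved", "assigned"},  # assumption: a failed fix returns to "assigned"
    "resolved": {"closed"},                # code review and commit are done
    "closed": set(),                       # end of the line
}


def advance(current: str, new: str) -> str:
    """Return the new status, or raise ValueError if the move is not allowed."""
    if new not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move from {current!r} to {new!r}")
    return new


def replay(statuses, start: str = "assigned") -> str:
    """Walk an issue through a sequence of statuses, checking every step."""
    current = start
    for status in statuses:
        current = advance(current, status)
    return current


if __name__ == "__main__":
    # A fix that fails verification once, then passes on the second attempt.
    history = ["acknowledged", "feedback", "assigned",
               "acknowledged", "feedback", "resolved", "closed"]
    print(replay(history))
```

Keeping the allowed moves in one table makes it easy to see that an issue can't jump straight from Assigned to Closed without a tester verifying it and a code review happening first.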

So there was a lot of work being done around the process, and the systems we have in place (Subversion, Jenkins, Mantis, Findbugs, Sonar, Google Code and even Google Docs) worked well to support this. When you couple this approach with focus meetings to catch up on how we are progressing, this really delivers a good, open experience for all the people involved in the process.

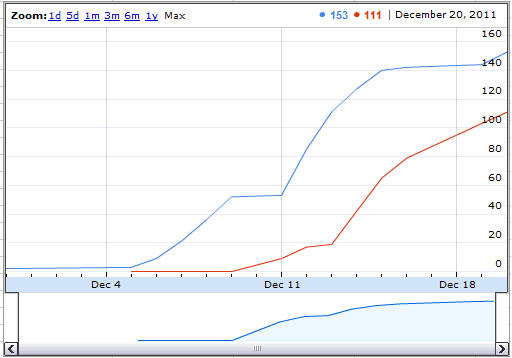

Did we fix every bug in the time we had? Of course not. It’s not uncommon for software to ship with known issues (that’s what release notes are for), and we have a plan over the coming months to have more releases going out to address these. However, I’m very proud to say that all of the blocking issues (only five!), all of the major issues (30 exactly), and an additional 76 ‘minor’ bugs were addressed in the release in some form – the majority through fixes, and a couple of edge-case bugs through release notes at this stage. Productivity-wise, we achieved a lot in a short timeframe of just one week of solid testing and fixing – 111 squashed bugs!

Our tracking chart, showing bugs found (blue) and bugs squashed (red)

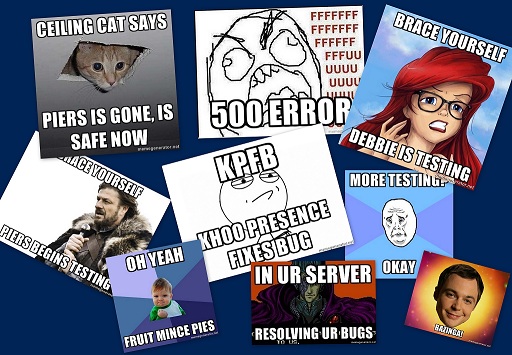

Testing is something I actually enjoy doing. I like seeing final products get better so quickly, and I like digging into the detail of systems and trying to break them (using my tried and trusty methods that include SQL injections, and the good ol’ “hold down shift and press all the buttons on your keyboard” method). In what could have been a dry, dull experience, we really had quite a bit of fun along the way, as these images we were throwing at each other during the testing show…

Anyway, version 1.0 of the BDRS is now up on the Google Code repository, ready for people to take and upgrade their existing installation, or to get started on the system. We’ll have more releases coming in the near future, and here are some ways to get started:

- Read the installation guide first. This will tell you what the requirements are for your servers and systems, and will let you know what sort of skills you need.

- Take the version 1.0 code (you can see it here, or check it out using Subversion via: svn checkout http://ala-citizenscience.googlecode.com/svn/tags/releases/1.0 <target-folder>) and get started with it. While you’re there, familiarise yourself with the Admin guide as well as the Release notes. The Admin guide has some things in it that you need to be aware of in terms of how to set up a secure site.

- Send us an email if you get stuck. We’re on skeleton staff now for the rest of the year, and will be closed from Thursday 22nd December and back on 3rd January, 2012. When we’re back, we’ll be able to help where our resources permit. Alternatively, you can always engage us to put a system in place for you… 🙂

This has been a big couple of years of development on the BDRS. It’s great to see it reaching maturity and starting to really get some traction out there. As I wrote in the release notes, the future for it looks really bright – there are another six months of work still to come on getting this really up to scratch, and we have commercial contracts to do additional development – which will be committed back to the open source codebase.

I must be some sort of masochist – I can’t wait for the next round of testing – because that means we’ll be at v1.1 already!

Fire me off an email, leave me a comment, or send me a tweet if you want to know more.

Piers
