We went out again last Friday for another field test of the mobile components of the Citizen Science software we’re building for the Atlas of Living Australia. You can see our blog article on the previous testing here.

This time, we went out with the express purpose of testing the recording forms on a range of mobile devices. We took several on this trip, including an iPhone 4, a Galaxy Tab, an HTC Desire, and an iPad. Some of these were using the wrapped (with PhoneGap) version of the mobile HTML5 application, and a couple used the HTML5 version in offline mode. The team have been working hard on implementing a range of new functionality on the forms, and we really wanted to put them through their paces, so off we went…

We had a go at recording a bit of video of the testing as well…

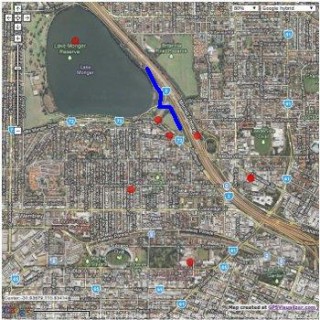

The results of this testing were quite interesting. Firstly, let’s look at the co-ordinates that were recorded on AJ’s Galaxy Tab using the GPS on the device.

The red dots are where the device logged its records, and the blue line is where we actually walked. This is a pretty inaccurate set of points! Again, as we found with Emergentweet, the on-board GPS needs some more filtering before you accept the points that it records. Positional accuracy is going to be a big problem until we get this right in the software. At the very least, we’ll be looking to record the accuracy reported by the GPS, and preferably to prevent recording when that accuracy is worse than a certain threshold.
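To make the filtering idea a bit more concrete, here is a minimal sketch of an accuracy gate in TypeScript. It is not what the app does today. The `Fix` shape, the function names and the 20 m cut-off are all assumptions made for illustration. In a browser or PhoneGap app the accuracy figure would typically come from the Geolocation API's `coords.accuracy`, which is an estimated error radius in metres, so smaller numbers are better.

```typescript
// Hypothetical shape of one recorded position; field names are assumptions.
interface Fix {
  latitude: number;
  longitude: number;
  accuracyMetres: number; // estimated error radius in metres; smaller is better
}

// Assumed cut-off for this sketch; the real value would need tuning in the field.
const MAX_ACCURACY_METRES = 20;

// True when the fix reports a usable accuracy that is within the cut-off.
function isAcceptableFix(fix: Fix, maxAccuracyMetres: number = MAX_ACCURACY_METRES): boolean {
  return (
    isFinite(fix.accuracyMetres) &&
    fix.accuracyMetres >= 0 &&
    fix.accuracyMetres <= maxAccuracyMetres
  );
}

// Keep only the fixes that pass the gate. The accuracy stays on each fix,
// so it can still be stored alongside the record.
function filterFixes(fixes: Fix[], maxAccuracyMetres: number = MAX_ACCURACY_METRES): Fix[] {
  return fixes.filter((fix) => isAcceptableFix(fix, maxAccuracyMetres));
}
```

A form could call `isAcceptableFix` before enabling its save button, and still store `accuracyMetres` with every record so that doubtful points can be reviewed later.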

Photos were also something we played with. We recorded a White-Faced Heron on the survey, and we got a few images of the bird from different devices. First up, AJ’s Galaxy Tab again (click for actual size recorded):

And Kehan, on his phone (again, click for actual size):

And finally, me, using a Canon EOS 400D with a zoom lens (click for the 1024×768 I resized for my Picasa album):

Obviously, we didn’t use all the same hardware, but there is a vast difference between these photos (somewhat exacerbated by my choice of sizes for the smaller versions!). Kehan’s was also corrupted somehow, and the original files show that same grey banding. So, especially when you look at AJ’s very small bird in the middle of that picture, you can see that on-board cameras are going to be of dubious use for validating records.

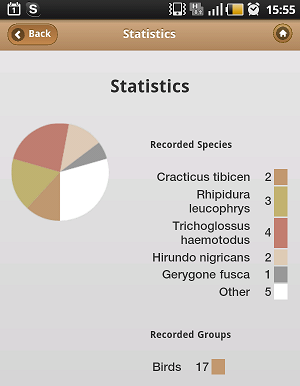

What was also very interesting to me personally was some work Ben Khoo has been doing in the mobile apps on generating statistics from your records on the fly. The first cut does a couple of pie charts of what you’ve been recording, but there’s quite a bit more on the horizon for the statistics abilities of this software. For now, even seeing this directly after you finish your survey was quite cool…
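For a rough idea of what sits behind a pie chart like that, here is a small TypeScript sketch that tallies records by species and turns the totals into slice fractions. This is not Ben’s implementation. The `Sighting` and `PieSlice` shapes and both function names are assumptions made up for this example.

```typescript
// Hypothetical shape of one survey record; field names are assumptions.
interface Sighting {
  species: string;
  count: number;
}

// One slice of the pie: the species, its total, and its share of all individuals.
interface PieSlice {
  species: string;
  count: number;
  fraction: number; // between 0 and 1
}

// Add up the number of individuals recorded for each species.
function tallyBySpecies(sightings: Sighting[]): Record<string, number> {
  const totals: Record<string, number> = {};
  for (const sighting of sightings) {
    totals[sighting.species] = (totals[sighting.species] || 0) + sighting.count;
  }
  return totals;
}

// Convert the totals into slices; returns an empty list when nothing was recorded.
function toPieSlices(totals: Record<string, number>): PieSlice[] {
  const names = Object.keys(totals);
  const grandTotal = names.reduce((sum, name) => sum + totals[name], 0);
  if (grandTotal === 0) {
    return [];
  }
  return names.map((name) => ({
    species: name,
    count: totals[name],
    fraction: totals[name] / grandTotal,
  }));
}
```

Because the tally is a single pass over the records, it is cheap enough to rerun on the device each time a survey is finished.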

The end result of the testing was that we came back and tore down the trip, including doing a SWOT analysis of where we are at. The overriding aspect of the software that the team will be working on is the performance of the system, especially the autocomplete fields for species names. A bunch of other usability issues were identified, and we have got some great ideas to implement thanks to a half hour of wandering around in the field… once again, this really validated field trips as a useful way to test the software.

I can’t wait to see where we end up in the next round of testing!

Piers
